Hateful & discriminatory content should be Ofcom’s top priority

TV and radio programmes that are unsuitable for children, or which contain hateful or discriminatory content, should be the priorities for Ofcom’s work in upholding standards – according to viewers and listeners.

A new study of audiences’ expectations in the digital world reveals broad support for broadcasting rules, which ensure audiences are protected while freedom of expression is upheld. And the research, commissioned by Ofcom, finds widespread agreement among audiences that society’s views around offence have shifted in recent years.

People told Ofcom that they expect regulation to focus on cases of content inciting crime and causing harm, even if it airs on smaller, non-mainstream channels or stations aimed at particular communities. Similarly, many want to see priority given to cases involving discriminatory content targeted at specific groups, particularly if it risks harming vulnerable people.

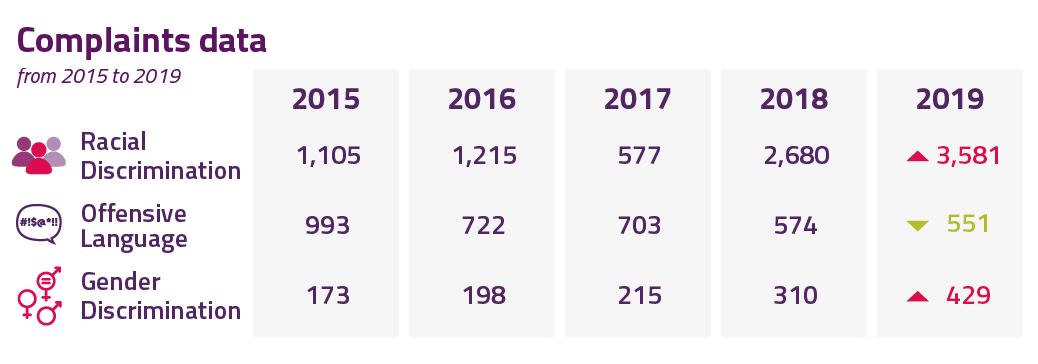

The pattern of offence-related audience complaints made to Ofcom over the last five years also reflects these shifting priorities. Concerns around swearing, for example, have trended downwards – falling by 45% between 2015 and 2019. In comparison, complaints about racial and gender discrimination have increased by 224% and 148% respectively, during the same period.[1]

Protecting viewers and listeners from harm

Ofcom’s job is to set and enforce broadcasting rules to protect viewers and listeners from harmful or unjustifiably offensive content, among other things. To help us ensure these rules remain effective and up to date, we periodically carry out in-depth research to understand audiences’ concerns, needs and priorities. This supplements the tens of thousands of audience complaints we receive each year.

Our latest wave of audience research involved workshops, focus groups and in-depth interviews with members of the public of all ages and backgrounds across the UK. It asked for their views about content standards across TV, radio, catch-up, subscription and video-sharing services.

What makes today's audiences tick?

Viewers and listeners generally think that people themselves are responsible for choosing what programmes they watch or listen to. They value the variety of content available today, and their freedom to access it at any time and in any place.

But there is also broad support for the role of regulation, and the role of broadcasters, in ensuring that content is appropriate and reflects audiences’ expectations. In particular, viewers and listeners told us that:

rules to protect children from unsuitable content are essential, although participants also feel that parents and carers have a primary role in policing what their children watch;

rules around incitement of crime, disorder, hatred and abuse are very important. People feel that the consequences of inciting hate crime are the most serious, and want to see these cases prioritised – even if the content airs on smaller or non-mainstream channels and stations aimed at specific communities;

discriminatory content against specific groups is more concerning than other offensive content, such as nudity and swearing, and should be prioritised. Many participants reflected on how attitudes towards race and sexuality have changed, pointing out that TV programmes in previous decades included language, storylines and behaviours that are now perceived as discriminatory;

they recognise that offence is subjective and that freedom of expression is important. But audiences want access to clear information about programme content so they can make informed decisions. Pre-programme warnings are seen as important in mitigating potential offence by telling viewers what to expect;

mistakes during live broadcasts are acceptable if they are genuine and unavoidable. For example, participants suggested that Ofcom action might not be necessary after accidental on-air swearing. This applied particularly if an apology was made, or if the language came from a member of the public in a way that was beyond the broadcaster's control, and if the programme was unlikely to be seen or heard by children; and

they are worried about a lack of regulation on video-sharing platforms. Participants felt that they were more likely to come across inappropriate or upsetting content accidentally on these sites, with rolling playlists, pop-ups, and unchecked user-generated content being cited as common concerns.

Ofcom comments on the findings

Tony Close, Ofcom's Director of Content Standards, said:

"People in the UK are passionate about what they see and hear on TV, radio and on-demand. And thanks to social media, debates about programmes today are more animated and immediate than ever.

"Word spreads quickly about ‘must-watch’ shows that engage and inspire people; those that typify British culture and bring the nation together; and those that move us to tears. But equally, viewers and listeners know when broadcasters get it wrong or fall short of the standards they expect.

"A crucial part of our job at Ofcom is to listen to these views and act on them wherever necessary. Last year, that meant assessing around 28,000 complaints and reviewing almost 7,000 hours of programmes.

"But complaints figures are only part of the picture. It’s important that, from time to time, we carry out extra research to really understand viewers’ and listeners’ concerns, needs and priorities. This helps us to ensure our broadcasting rules remain effective and up to date.

"We know that audiences’ tastes, attitudes and preferences change over time. And we’ve seen significant shifts in social norms that have changed the kind of content they’re choosing to watch. A dating show entirely premised on full frontal nudity, even post-watershed, was once unthinkable. Nasty Nick’s dastardly deeds in the first series of Big Brother, which offended many in 2000, would seem less remarkable now after two more decades of reality TV. And racial stereotypes that were a feature of some comedy shows in the 70s and 80s are unacceptable to modern audiences and society.

"The people who took part in the research overwhelmingly agreed that rules protecting children from unsuitable content remain essential. And they also felt that tougher rules should be applied to online content. There was a clear call for action to be prioritised against content that incites crime or hatred, or discriminates against groups or individuals, over other offensive content such as nudity or swearing.

"Our job is to listen to those concerns, and balance people’s right of protection against their right to receive a range of information and ideas, and of course broadcasters’ right to freedom of expression. We want to make sure that we’re doing the best job we can in upholding standards on TV, radio and on-demand services. And the research offers an important window into the hearts and minds of modern-day audiences. This will help inform how we apply and enforce our broadcasting rules on their behalf."

Marked increase in the number of complaints about racial and gender discrimination

Ofcom complaints data from 2015 to 2019 shows a marked increase in the number of complaints about racial and gender discrimination. Over the same period, the number of complaints about offensive language has steadily declined.

From next year, Ofcom will take on new responsibilities for regulating video-sharing platforms established in the UK. Under these new rules, platforms must have measures in place to protect young people from potentially harmful content and to ensure that all users are protected from hate speech and illegal content. This is an interim role ahead of new online harms regulation. On 12 February 2020, in its initial consultation response to the Online Harms White Paper, the Government announced that it is minded to appoint Ofcom as the new regulator for online harms.

The full report, Audience expectations in a digital world, was produced for Ofcom by Ipsos MORI.